I’ve got a proof stuck in my head.

It comes from a man as brilliant as he is grumpy. Last week in the staff room, one of my all-time favorite colleagues busted out a dozen-line proof of a world-famous theorem. Though I doubt it’s original to him, I shall dub it Deeley’s Ditty in his honor:

This argument is sneaky like a thief. I admire but don’t wholly trust it, because it solves a differential equation by separation of variables, a technique I still regard as black magic.
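For readers who haven't met this kind of argument, here is how a separation-of-variables proof of Euler's identity typically runs. It is a sketch of the general technique, not necessarily the exact lines of Deeley's version. It also glosses over the same subtleties that make it feel like a magic trick: differentiating a complex-valued function, and taking a complex logarithm.

$$
\begin{aligned}
y &= \cos t + i\sin t \\
\frac{dy}{dt} &= -\sin t + i\cos t = i(\cos t + i\sin t) = iy \\
\int \frac{dy}{y} &= \int i\,dt \quad\Longrightarrow\quad \ln y = it + C \\
t = 0,\ y = 1 &\quad\Longrightarrow\quad C = 0, \ \text{so } y = e^{it} \\
t = \pi,\ y = \cos\pi + i\sin\pi = -1 &\quad\Longrightarrow\quad e^{i\pi} = -1
\end{aligned}
$$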

What I can’t deny is that it’s about 17 million times shorter than the proof I usually show to students, which works via Taylor series:

You might call this one Taylor’s Opus. It builds like a symphony, with distinct movements, powerful motifs, and a grand finale. It takes familiar objects (trig and exponential functions) and leaves them transformed. It’s rich, challenging, and complete.
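Taylor's Opus turns on one move. You plug $x = i\pi$ into the series for $e^x$, then sort the terms using the cycle $i^0, i^1, i^2, i^3 = 1, i, -1, -i$. The even-index terms rebuild the cosine series and the odd-index terms rebuild the sine series. The short Python sketch below does that bookkeeping numerically, so you can watch the partial sums settle at $-1$ and $0$. The function name and the 30-term cutoff are our own choices.

```python
import math

def exp_taylor_parts(x, terms=30):
    """Partial sum of the Taylor series of e^(ix), returned as
    (real part, imaginary part). Each term is (ix)^n / n!."""
    real_part = 0.0  # collects 1 - x^2/2! + x^4/4! - ...  (the cosine series)
    imag_part = 0.0  # collects x - x^3/3! + x^5/5! - ...   (the sine series)
    for n in range(terms):
        term = x ** n / math.factorial(n)
        # i^n cycles through 1, i, -1, -i as n runs through 0, 1, 2, 3 (mod 4)
        k = n % 4
        if k == 0:
            real_part += term
        elif k == 1:
            imag_part += term
        elif k == 2:
            real_part -= term
        else:
            imag_part -= term
    return real_part, imag_part

re_part, im_part = exp_taylor_parts(math.pi)
print(re_part, im_part)  # approximately -1.0 and 0.0
```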

It’s also as slow as an aircraft carrier making a three-point turn.

Here we have two proofs. The first is a nifty shortcut, persuasive but not explanatory. Watching it unfold, I’m unsure whether I’m witnessing a scientific demo or a magic trick.

The second is an elaborate work of architecture. It explains but perhaps overwhelms. If an argument is a connector between one idea and another, then for some students, this will feel like building the George Washington Bridge to span a creek.

The question it prompts, to me, is: What do we want from a proof?

Here’s another example. In my first week of teaching here in England, I posed a classic challenge to my 14-year-olds: Prove that √2 is irrational. To my delight, it took only 90 seconds before one of them produced this clever argument, which I’ll call Dan’s Ditty:

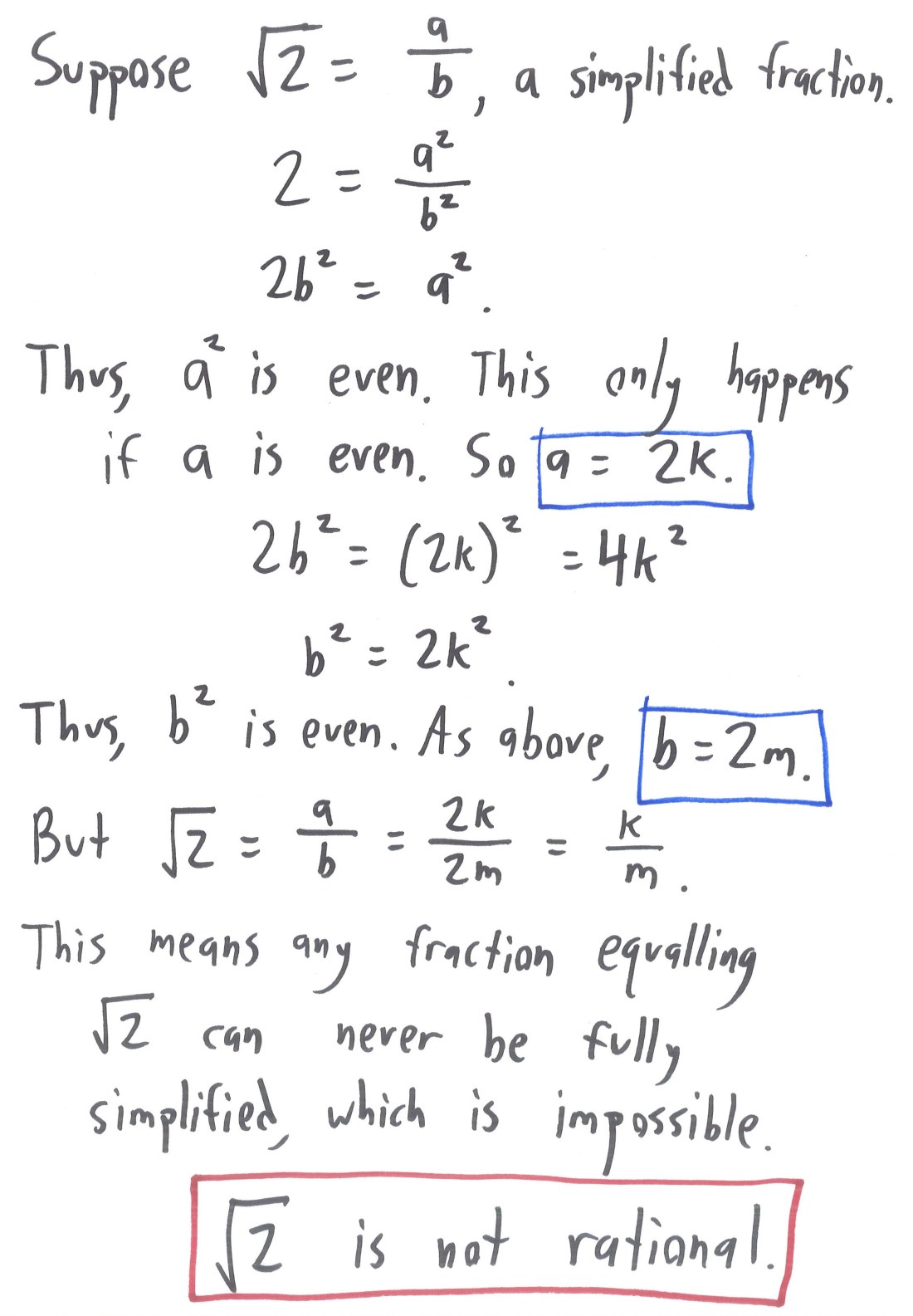
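Dan's argument turns on counting prime factors *with multiplicity*. A perfect square $a^2$ always has an even count, while $2b^2$ always has an odd one, so the two can never be equal. Here is a minimal Python sketch of that parity check, using plain trial division (fine for small numbers). The helper name `count_prime_factors` is ours, and the loops only spot-check small values rather than prove anything.

```python
def count_prime_factors(n):
    """Number of prime factors of n >= 1, counted with multiplicity (12 = 2*2*3 -> 3)."""
    count = 0
    p = 2
    while p * p <= n:
        while n % p == 0:
            n //= p
            count += 1
        p += 1
    if n > 1:  # whatever is left over is a single prime
        count += 1
    return count

# a^2 has an even number of prime factors; 2*b^2 has an odd number.
for a in range(1, 200):
    assert count_prime_factors(a * a) % 2 == 0
for b in range(1, 200):
    assert count_prime_factors(2 * b * b) % 2 == 1
print("parity pattern holds for all a, b < 200")
```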

I loved it, finding it slicker and more satisfying than the standard proof I’d seen a dozen times:
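For reference, the standard argument usually runs like this. This is a sketch in our own words, and the exact phrasing varies from textbook to textbook:

$$
\begin{aligned}
&\text{Suppose } \sqrt{2} = \tfrac{a}{b} \text{ with integers } a, b \text{ sharing no common factor.} \\
&\text{Then } a^2 = 2b^2, \text{ so } a^2 \text{ is even, and hence } a \text{ is even: } a = 2k. \\
&\text{Substituting, } 4k^2 = 2b^2, \text{ so } b^2 = 2k^2, \text{ and } b \text{ is even too.} \\
&\text{So } a \text{ and } b \text{ share the factor } 2, \text{ a contradiction.}
\end{aligned}
$$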

Of course, I could also see the ditty’s downfall. It relies on the Fundamental Theorem of Arithmetic: the idea that each number has a unique prime factorization. That’s a nontrivial result, one I didn’t encounter until group theory in college. No such machinery is needed for the standard proof, which Hardy and Erdős (among others) hailed as one of the loveliest and most perfect in all of mathematics.

When I hold the two ditties side by side, some themes emerge:

What do we want from a proof? I say it depends on the spirit in the room.

In the somber mood of scholarship, clad in academic gowns and posing for our portraits, we prize rigor and depth. The “standard proofs” are standard for good reason. They convey unambiguous truths through careful logic. They’ve stood the test of time better than just about any other work of the human mind.

But in playful moods, holding coffee in one hand and chalk in the other, there’s a lot to be said for the ditties. They’re fun. They provoke. They refresh. They’re like trying a new path on the commute home; coming at the street from the other side, you see a slightly different world.

Here’s a last and favorite example, which I heard from my boss Neil, who heard it from whoever he heard it from: A proof that all higher-order roots of 2 are irrational.
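In its usual form (this is our sketch of it), the argument works for any integer $n \ge 3$. If $\sqrt[n]{2}$ were a fraction $p/q$ of positive integers, then

$$
2 = \frac{p^n}{q^n} \;\Longrightarrow\; p^n = 2q^n = q^n + q^n,
$$

so $x = y = q$, $z = p$ would solve $x^n + y^n = z^n$ in positive integers, which Fermat's Last Theorem rules out.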

I find it hard not to smile at that one.

It’s as if Andrew Wiles has arrived at the top of Everest, only to notice that I’ve been riding piggyback the whole way. “Hey, what are you doing here?!” cries Sir Andrew, and by way of response, I grab a chunk of fresh Himalayan snow and drop it into my drink. “Needed ice,” I explain.

Even though an awful lot of this is “blah blah blah” to me (technical term), I do love your enjoyment of it, and your enthusiasm. And it’s “maths”, by the way…

Thanks! I realize this is more technical than most of my posts, so thanks for muscling through.

(And my British colleagues are delighted whenever I say “maths” now, which is about half the time. I’m going to get whiplash when I move back to the US.)

Please, don’t acquiesce to the “maths” nonsense! It is a truly vile and repugnant word. “Mathematics” should forever be the standard. 🙂

Have I told you lately how much I love your ideas! As refreshing as Himalayan snow!

🙂

Really nice!

I feel that the standard proof of the irrationality of sqrt(2) is much more ‘fishy’ than Dan’s Ditty (which I didn’t know and really like). Both proofs need some form of the Fundamental Theorem (or Euclid’s Lemma), but where Dan’s Ditty calls attention to it in a form which is useful outside just this proof (much like Taylor’s Opus does), the standard proof sweeps it under the rug in the semi-obvious sentence ‘This only happens when a is even’.

There is a reason we used to follow up on the standard proof with the question ‘Ok, can you now use this method to prove that sqrt(3) is irrational?’ and, if the student figures that out, ‘Ok, can you now use this method to prove that sqrt(4) is irrational?’.

Hmm – I guess I hadn’t unpacked the assumptions in the standard proof! To demonstrate (2k)^2 = 4k^2 is even, and (2k+1)^2 = 4k^2 + 4k + 1 is odd, do we need anything more than definitions of “even” and “odd”? Does this rely on Euclid’s Proposition 30 in the background?

I also like those follow-up questions. Reading a proof is often a slightly passive learning experience, and applying to sqrt(3) and sqrt(4) gives a good check of understanding.

I’m not sure which one is number 30, but I meant the one that reads ‘If a prime number $p$ divides a product $ab$, then it divides $a$ or it divides $b$ (or both, of course).’ But you are right: in the special case $p = 2$ you can verify this quickly without proving the full result, thanks to a very special property of 2, namely that ‘being congruent to 1 mod 2’ is the same as ‘not being divisible by 2’. This does not hold for $p = 3$, so applying the method to sqrt(3) really needs a new idea. But it is doable, and it is a nice way to figure out for yourself the concept of congruence classes as the proper generalization of odd and even, in case no one has told you about them before.

Asking about sqrt(4) on the other hand is just pure evil, or in more friendly terms giving a good check of understanding as you put it.
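The step under discussion is ‘if $m$ divides $a^2$, then $m$ divides $a$’. Whether it holds depends only on $a$ mod $m$, so for a fixed $m$ it can be checked by brute force over the $m$ residues. This sketch (the helper name is ours) confirms it for $m = 2$ and $m = 3$. It also pinpoints why the method must fail for sqrt(4): with $a = 2$, $4$ divides $a^2$ but not $a$.

```python
def square_divisibility_counterexamples(m):
    """Residues r (mod m) where m divides r^2 but m does not divide r.

    An empty list means m | a^2 always forces m | a, which is exactly
    the step the standard proof needs."""
    return [r for r in range(m) if (r * r) % m == 0 and r % m != 0]

for m in (2, 3, 4):
    print(m, square_divisibility_counterexamples(m))
# 2 []
# 3 []
# 4 [2]
```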

Thanks for clarifying; your point about generalizing the standard proof to equivalence classes mod $n$ goes well with the point others have made about the nice generalizations of Dan’s Ditty.

That proof by Fermat’s Last Theorem is circular, because the proof of FLT itself relies on that irrationality.

I don’t understand the technicalities here, but it looks plausible. Can someone confirm?

https://mathoverflow.net/questions/42512/awfully-sophisticated-proof-for-simple-facts/42519#comment100811_42519

Yeah, my knowledge of FLT is no deeper than the statement of the theorem, but the explanation on Math Overflow looks pretty credible.

The circularity is a bit of a shame, although I still find the argument a fun toy.

Here’s another alternative proof of a standard theorem: the base angles of an equilateral triangle are equal. Let A be the point opposite the base, as usual. Then the triangles ABC and ACB are congruent by SSS, and therefore the corresponding angles ABC and ACB are equal. No construction needed.

This was one of the first proofs found by a computer program, although it had been known (if obscure) before.

Ha! That’s really cute. I love the idea of the same triangle being congruent to itself. Clever computer.

Same proof works for any isosceles triangle, right? AB = AC, BC = CB, and CA = BA.

Yes, I meant to write “isosceles”.

Ah, makes sense! I was thinking that maybe the computer chose to prove it specifically for the equilateral case. The whims of computers remain pretty mysterious to me.

Nice post! Here are several thoughts in no particular order…

When I looked more closely, I discovered that both of the e^{i pi}=-1 proofs are actually just proofs that e^{i t} = cos t + i sin t with the final move subbing in pi. This fact was obscured in the ditty by not writing down explicitly that y=cos t + i sin t and y=e^{i t} means e^{i t}=cos t + i sin t. Indeed, it doesn’t even write down explicitly how we knew that when t=pi, y=-1, which was subbing pi into cos t + i sin t. So maybe the ditty is more like the second proof than it at first looked.
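This observation is easy to spot-check numerically: both proofs really establish $e^{it} = \cos t + i\sin t$ for every $t$, with $t = \pi$ as the final substitution. Here is a quick check using Python's standard `cmath` module at a few sample points we picked arbitrarily. It is evidence rather than a proof, which is rather the point being made here.

```python
import cmath
import math

# Compare e^(it) with cos t + i sin t at a handful of sample points.
for t in (0.0, 0.5, 1.0, math.pi / 2, math.pi, 4.0):
    lhs = cmath.exp(1j * t)
    rhs = complex(math.cos(t), math.sin(t))
    assert abs(lhs - rhs) < 1e-12, t

print(cmath.exp(1j * math.pi))  # -1, plus a tiny imaginary rounding error
```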

Still, I agree that it is WAY less intimidating not to have all the Taylor series!

I find both proofs a little uncomfortable because both are done by doing real-number things (like solving differential equations and finding Taylor series) with complex numbers. Why can you expect to just be able to differentiate a complex-number function, or to just sub a complex number into the Taylor series? Indeed, the usual introduction to Taylor series is via differentiation, so the issue of differentiating a complex-number function is still there too.

So for me, they’re both just reasons to believe e^{i t} = cos t + i sin t as opposed to proofs per se. On the other hand, I really like having lots of reasons to believe things, even if they’re not proofs. In a “proper” proof you often get bogged down in a whole lot of other details, distracting from the coolness of the result itself. So I still like to have things that are more dittyish in my head. Enough of them and I can believe the result enough to want a more rigorous proof.

I really like Dan’s sqrt(2) proof! You can tell Dan I’m going to be using it with the students here at my university in the future.

Plus, I actually don’t think it’s all that far from the standard one after all. He used the full prime factorisation, but really you only need the 2’s rather than the whole thing. On one side there’s an odd number of 2’s (as opposed to prime factors) and on the other there’s an even number of 2’s (as opposed to prime factors). So you could make it just about the number 2 rather than the full prime factorisation without breaking the slickness of it.
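Here is a sketch of that slimmed-down version, which tracks only the number of 2's (the exponent of 2, sometimes called the 2-adic valuation). The function name `twos` is ours. Squaring doubles the count, so $a^2$ always has an even number of 2's, while $2b^2$ has one more than an even number:

```python
def twos(n):
    """How many times 2 divides the positive integer n (its 2-adic valuation)."""
    count = 0
    while n % 2 == 0:
        n //= 2
        count += 1
    return count

# twos(a*a) == 2*twos(a) is even; twos(2*b*b) == 1 + 2*twos(b) is odd.
for a in range(1, 500):
    assert twos(a * a) == 2 * twos(a)
for b in range(1, 500):
    assert twos(2 * b * b) == 1 + 2 * twos(b)
```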

Finally, I couldn’t resist sharing a couple of sqrt(2) proofs I’ve seen in the past http://blogs.adelaide.edu.au/maths-learning/2015/07/12/the-square-root-of-two/

Yes, the third of these seems to be really different from the one discussed up here. Quite spectacular! I know of one other proof that uses those same ‘very fundamental facts about integers’, as you nicely put it: the geometric proof discussed in ‘Figure 1’ of the Wikipedia page on Sqrt(2): https://en.wikipedia.org/wiki/Square_root_of_2. It is really worth looking at if you have never seen it before!

(Side note: I think it is a shame that Wikipedia put the algebraic expressions (a – b)^2 etc in the drawing because they are a bit of a red herring. The fact that the two white squares together have the same size as the middle dark grey square does not follow from expanding the brackets, but from the assumption that the (overlapping) light grey squares together have the same size as the outer square. Hence the non-covered part (white) of the outer square must be the same size as the doubly covered part (dark grey). But perhaps we need the expressions in terms of a and b to see that the smaller squares do indeed have integer sides.)
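The algebra behind that figure, as a sketch: write $a$ for the side of the outer square and $b$ for the side of each light grey square, so the assumption is $a^2 = 2b^2$ with $a, b$ positive integers. The dark grey overlap has side $2b - a$ and each white square has side $a - b$, and

$$
(2b - a)^2 = 4b^2 - 4ab + a^2 = 2a^2 - 4ab + 2b^2 = 2(a - b)^2,
$$

using $4b^2 = 2a^2$ and $a^2 = 2b^2$ in the middle step. Since $b < a < 2b$, both $2b - a$ and $a - b$ are positive integers and $2b - a < a$, so we get a strictly smaller solution. Repeating this forever is impossible, so no solution exists in the first place.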

Ehm, in case it wasn’t clear: this was in reaction to the blog post you linked to at the very end of your comment…

Thanks, David – all really nice thoughts!

You’re right of course that there’s a leap in extending e^x and the trig functions to accept complex inputs by relying on the Taylor series; somehow it feels okay to me, though, akin to redefining sine as “the y-coordinate where the angle hits the unit circle” after years of thinking of it as “opposite/hypotenuse.” (Or, another example: extending the definition of a^b to include negative and non-integer b.) There’s some work to be done checking that the new definition agrees with the old one, but once that’s established, it feels satisfying to me. I suppose that’s an aesthetic judgment though – and not so different from your point that ditties are nice to have around for purposes of persuasion and illumination!

Regarding Dan’s Ditty, I seem to remember my real analysis text asking for a proof of the irrationality of sqrt 2, specifically saying “do not use the fundamental theorem of arithmetic”, as it had not been proven in the class.

As for Deeley’s Ditty, I am not sure that it is so much quicker than your own proof. Your proof is all of 10 lines. Sure, you have more terms on each line, but it is about the same.

As I remember, in complex analysis we defined exp(ix) = cos x + i sin x, so Euler’s identity was true by definition, regardless of the fact that we may have had a different definition of exp(x) in previous classes.

Years earlier, my high-school teacher walked me through the Taylor series approach, and I discovered Deeley’s on my own. But really, Euler’s identity follows quite naturally from De Moivre’s theorem, and perhaps it should be introduced in pre-calculus.

And maybe it is just me… but I think that e^(pi i) = -1 is more aesthetically pleasing than e^(pi i) + 1 = 0

I suppose the Taylor series proof isn’t too much slower for students who know Taylor series; for my IB students, though, they aren’t covered in the course, so even a sketchy justification of those Taylor series is a lot to digest in a single lesson!

I enjoy Euler’s identity either way; I can’t resist the “putting together the five most important constants in mathematics” allure of the “=0” version, but I see where the “=-1” version is a little more parsimonious.

The difficulty with both proofs is that neither makes clear how e^{i t} is actually defined. I find that this is what tends to be swept under the carpet. Once this is made clear, the proof of de Moivre’s theorem usually just drops out.

I wrote a bit about this at http://loopspace.mathforge.org/CountingOnMyFingers/ButIsItArt/ It was in response to the pervasive idea of the beauty of Euler’s equation so is probably not as terse as it would be if I were writing it just to address your post. Still, I hope it’s useful.

Thanks – I’ll check it out!

Wow, I like Dan’s Ditty. For one thing, it is immediately obvious what happens when you apply it to sqrt(4): now you have an even number of prime factors on both sides, the impossible becomes possible, and what was a contradiction becomes the formula to find the rational square root.

That’s a good point; as others are pointing out elsewhere in the comments, it generalizes really nicely.

Regarding the use of functions of complex numbers, I agree with Loopspace’s earlier comment.

Regarding your discomfort with separation of variables, I’m curious why you think it’s black magic. If you write integral symbols at the same time that you separate, you should hopefully see it as nothing more than chain rule. The apparent allowance for separating dy and dx is inherited from the apparent ability (via chain rule) to treat Leibniz notation dy/dx as a fraction.

In fact, if it bothers you so much, leave dy/dx on the “y” side and integrate both sides with respect to x, and you’ll see the substitution method that you teach your calculus students (which is just “backwards” chain rule).
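Spelled out for a general separable equation $\frac{dy}{dx} = f(x)\,g(y)$, wherever $g(y) \ne 0$, the step is just the chain rule read backwards. In this sketch, $G$ is our name for any antiderivative of $1/g$:

$$
\frac{1}{g(y)}\frac{dy}{dx} = f(x)
\;\Longrightarrow\;
\frac{d}{dx}\,G\big(y(x)\big) = f(x), \qquad\text{where } G'(y) = \frac{1}{g(y)},
$$

$$
\text{so}\quad G\big(y(x)\big) = \int f(x)\,dx + C.
$$

"Separating $dy$ and $dx$" is shorthand for exactly this: the left side is $G'(y)\,\frac{dy}{dx}$, which the chain rule recognizes as the derivative of $G(y(x))$.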

You’re totally right! A commenter on Facebook pointed this out to me and of course it’s nothing but the chain rule. I never took a class on ODEs (one of many oceanic gaps in my math knowledge) so I’d never put in the few minutes to convince myself that it’s all above board. I’m persuaded now.

From my viewpoint, the inherent playfulness of showing contrasting proofs is perhaps the greatest benefit to a room full of students, especially younger students or those who’ve been given less engaging instruction previously.

I get positively riled up by the narrow view of mathematics as a subject in American K-12 schools. It’s a narrow ladder one climbs by applying correct calculations… but the most interesting bits shouldn’t all be obscured in the clouds!

Presenting alternate proofs may be the first time someone sees the presentation of two valid paths through a math problem. One way may be more intuitive, or more comfortable, or just more interesting to that person. That’s a coup!

I touched on this in a recent post that had more to do with how women (like me) need to talk about math:

http://reallywonderfulthings.me/2017/05/17/being-good-at-math-also-female-and-why-i-must-talk-about-that/

I consider myself enlisted in the battle to allow young people to discover what’s awesome about math. I mean awe-inspiringly powerful, worthy of admiration and respect, not just “super cool lip service” that STEM is good.

Sorry if I’m taking this too far afield. Once again, I really enjoyed your post. Thanks for what you write (and draw.) 🙂

Thanks for reading!

I think you’re right that alternate proofs make particularly good conversation starters for deeper discussions about mathematics. Lots of key themes in math (elegance vs. brute force, concreteness vs. generality, rigor vs. intuition, concision vs. exhaustiveness) emerge only from contrasts. It’s hard to know what to value or appreciate in a piece of mathematics until you see another piece with different merits.

What I like about “Dan’s Ditty” is that it can be very easily generalized to proving that the nth root of a positive integer is irrational if that integer is not a perfect nth power.
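The generalization rests on exponents. If $\sqrt[n]{m} = p/q$, then $m q^n = p^n$, and every prime's exponent in $p^n$ and in $q^n$ is a multiple of $n$, so the same must hold for every prime's exponent in $m$. In other words, for positive integers $m$ and $n$, $\sqrt[n]{m}$ is rational exactly when $m$ is a perfect $n$th power. Here is a minimal Python sketch of that test by trial division. The function name is ours.

```python
def nth_root_is_rational(m, n):
    """True iff the n-th root of the positive integer m is rational,
    i.e. iff every prime exponent in m is divisible by n (n >= 1)."""
    p = 2
    while p * p <= m:
        exponent = 0
        while m % p == 0:
            m //= p
            exponent += 1
        if exponent % n != 0:
            return False
        p += 1
    # A leftover m > 1 is a single prime with exponent 1,
    # which is a multiple of n only when n == 1.
    return m == 1 or n == 1

print(nth_root_is_rational(2, 2), nth_root_is_rational(4, 2), nth_root_is_rational(72, 3))
# False True False
```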

Well said!

I somewhat accidentally realized a similar sort of ditty, but with Gödel’s incompleteness theorem, when learning about the compactness theorem. The fact that the natural numbers can’t be axiomatized depends on the compactness theorem, and the compactness theorem, in turn, depends on the completeness theorem. Thus, using modus tollens a few times, the ability to axiomatize the natural numbers implies that the logic is not complete, and in particular, second-order logic is not complete because it can model the natural numbers.

So, it’s been a while since I posted this, and only slightly less since I realized why I was wrong. The result itself isn’t wrong; it’s just that this isn’t Gödel’s incompleteness theorem. In fact, Gödel’s theorem applies to first-order logic as well, which is confusing for newcomers: “first-order logic has a completeness theorem, so how can an incompleteness theorem hold?” That isn’t a bad question if you don’t know what the incompleteness theorem actually says.

The incompleteness theorem essentially says, “if a language can prove some arithmetic, there is some unprovable true statement,” which again seems to contradict completeness, since completeness says “a theorem is true if and only if it is provable,” which easily implies “there is no unprovable true statement.” This is a valid argument if you assume “true” means the same thing in both situations, which it doesn’t. In the first statement, “true” means true in the standard model, the normal natural numbers, while in the second, “true” means true in every model, not just the “intended” one. First-order logic has non-standard models, so the completeness theorem still holds: the statement that’s true in the standard natural numbers is simply false in some non-standard natural numbers, so it’s not true in every model. Unfortunately, second-order logic doesn’t have non-standard natural numbers, so the incompleteness theorem steps in and makes it impossible for second-order logic to have a completeness theorem, which is what I showed above.

Actually, neither Gödel’s first nor second theorem excludes the possibility that ~G, the negation of the Gödel string, has a finite proof in standard number theory. If that were true, then there would be no standard number theory and no bifurcation of theory at all: non-standard numbers would unconditionally exist, since it is G, the negation of ~G, that says they don’t. That would be very strange and unlikely, but not impossible! It may seem strange to think that G is false but (meta-mathematically speaking) has a proof, but this is of no consequence: the proof is infinitely long and can’t be constructed.

Just something I noticed: Dan’s Ditty says that a square has an even number of prime factors… which is wrong.

Any even power of a prime (and any number that is the product of such powers) has an odd number of factors; Dan is counting 1 twice there. For example: 4 has 3 factors (1, 2, 4), 81 has 5 (1, 3, 9, 27, 81), and 100 has 9 (1, 2, 4, 5, 10, 20, 25, 50, 100).

Unless he isn’t counting 1 as a factor, in which case it all does check out.

Prime factors. Prime. The number 1 is not prime.

Also, your examples list all sorts of composite factors. The number 81 does not have 5 prime factors. It has a single prime factor, namely 3, repeated 4 times. The numbers 1, 9, 27, and 81 are not prime.
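The mix-up is between two different counts. This short sketch (helper names ours) compares two things. The first is the list of prime factors counted with multiplicity, which is what Dan's argument uses. The second is the total number of divisors, which is what the examples above count.

```python
def prime_factors_with_multiplicity(n):
    """List of primes whose product is n, e.g. 100 -> [2, 2, 5, 5]."""
    factors = []
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors.append(p)
            n //= p
        p += 1
    if n > 1:  # leftover prime factor larger than sqrt of the original n
        factors.append(n)
    return factors

def divisors(n):
    """All positive divisors of n, e.g. 4 -> [1, 2, 4]."""
    return [d for d in range(1, n + 1) if n % d == 0]

for n in (4, 81, 100):
    print(n, prime_factors_with_multiplicity(n), len(divisors(n)))
# 4 [2, 2] 3
# 81 [3, 3, 3, 3] 5
# 100 [2, 2, 5, 5] 9
```

Every square has an even-length list in the middle column, even though its divisor count in the last column is odd.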

About your first example: Separation of variables is actually a lot safer than rearranging terms of an infinite series. Rearranging terms of an infinite series is only safe after you prove absolute convergence. Doing this without absolute convergence is exactly how people “prove” that the sum of (-1)^n is 0, or 1, or 1/2, or any number they want. And that leads directly to 0=1. So the Taylor series proof is actually more of a trick than the ditty in this example.
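A standard illustration of this danger uses the alternating harmonic series $1 - \tfrac12 + \tfrac13 - \cdots$, which converges to $\ln 2$ but not absolutely. Reordering the same terms as one positive term followed by two negative ones makes it converge to $\tfrac12 \ln 2$ instead. Here is a small numerical sketch; the function names and cutoffs are arbitrary choices of ours.

```python
import math

def alternating_harmonic(n_terms):
    """Partial sum of 1 - 1/2 + 1/3 - 1/4 + ... in the usual order."""
    return sum((-1) ** (k + 1) / k for k in range(1, n_terms + 1))

def rearranged(n_blocks):
    """The same terms, reordered as (1 - 1/2 - 1/4) + (1/3 - 1/6 - 1/8) + ...:
    each block uses the next odd reciprocal and the next two even reciprocals."""
    total = 0.0
    for k in range(1, n_blocks + 1):
        total += 1 / (2 * k - 1) - 1 / (4 * k - 2) - 1 / (4 * k)
    return total

print(alternating_harmonic(200_000), math.log(2))  # both about 0.693
print(rearranged(100_000), math.log(2) / 2)        # both about 0.347
```

Each block simplifies to $\tfrac12\left(\tfrac{1}{2k-1} - \tfrac{1}{2k}\right)$, which is why the reordered series lands on exactly half the original sum.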

You can do the differential equations proof that $e^{it}=\cos t+i\sin t$ using uniqueness of solutions to initial value problems instead of separation of variables. $y=e^{it}$ and $y=\cos t+i\sin t$ are both solutions to $y'=iy$, $y(0)=1$ [or, if you prefer, to $y''+y=0$, $y(0)=1$, $y'(0)=i$], and so by uniqueness of solutions they must be equal.
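The uniqueness argument also suggests a purely numerical experiment. You solve $y' = iy$, $y(0) = 1$ step by step, without ever mentioning $e$, cosine or sine, and see where the solution lands at $t = \pi$. Below is a sketch using the classical fourth-order Runge-Kutta method; the integrator, the step count and the function name are our choices, not part of the argument above.

```python
import math

def rk4(f, y0, t_end, steps):
    """Integrate y' = f(t, y) from t = 0 to t = t_end using classical
    fourth-order Runge-Kutta with `steps` equal steps. Python's built-in
    complex type means y may be complex-valued."""
    h = t_end / steps
    t, y = 0.0, y0
    for _ in range(steps):
        k1 = f(t, y)
        k2 = f(t + h / 2, y + h / 2 * k1)
        k3 = f(t + h / 2, y + h / 2 * k2)
        k4 = f(t + h, y + h * k3)
        y += h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return y

# The initial value problem y' = iy, y(0) = 1, with no e, cos or sin in sight.
y_at_pi = rk4(lambda t, y: 1j * y, 1 + 0j, math.pi, 1000)
print(y_at_pi)  # very close to -1
```

Both $e^{it}$ and $\cos t + i\sin t$ solve this same problem, which is why uniqueness forces them to agree; the numerics just make that agreement visible at $t = \pi$.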

I assign the second-order version of this argument as extra credit on the second exam every time I teach differential equations to engineering students.

I rather like that proof. But let’s put it in perspective.

We have the real numbers, and we like the idea of having a square root of -1. So we invent the complex numbers.

So far, so good. But $e^{i\pi}$ still doesn’t mean anything. We have to first extend our notational conventions so as to give that a meaning. And the Taylor series expansion is what suggests a good way to assign meaning to use of complex exponents.


Oh, and by the way, it is “math”, not “maths”. It used to be “maths” until I moved from Australia to the USA. And then it became “math”. But to each his own spelling and pronunciation.